Jinrui Zhang

I am currently a postdoctoral researcher advised by Prof. Ju Ren in the Department of Computer Science and Technology, Tsinghua University. I received my B.Sc., M.Sc., and Ph.D. degrees, all in computer science, from Central South University, China, in 2016, 2018, and 2023, respectively, co-advised by Prof. Yaoxue Zhang and Prof. Deyu Zhang. I was a visiting Ph.D. student at HCS Lab, Seoul National University, in 2022-2023, working with Prof. Youngki Lee. I was also a research intern in the HEX group at Microsoft Research Asia from Sept. 2019 to Mar. 2020 and at the Institute for AI Industry Research (AIR), Tsinghua University, from Aug. 2021 to Apr. 2022, mentored in both by Prof. Yunxin Liu.

News

- [2023/08] Our paper, "Ego3DPose: Capturing 3D Cues from Binocular Egocentric Views", was accepted by ACM SIGGRAPH Asia 2023! Congratulations to Taeho Kang and the other great coauthors.

- [2023/07] Our paper, "HiMoDepth: Efficient Training-free High-resolution On-device Depth Perception", was accepted by IEEE Transactions on Mobile Computing!

- [2022/08] Our paper, "MobiDepth: Real-Time Depth Estimation Using On-Device Dual Cameras", was accepted by ACM MobiCom'22!

- [2022/01] Our paper, "MVPose: Realtime Multi-Person Pose Estimation using Motion Vector on Mobile Devices", was accepted by IEEE Transactions on Mobile Computing!

- [2021/08] I joined the Institute for AI Industry Research (AIR), Tsinghua University. Mentor: Prof. Yunxin Liu.

- [2021/07] I received an invitation letter from Prof. Youngki Lee, Seoul National University, and my joint Ph.D. application to the China Scholarship Council was approved.

- [2021/04] Our paper, "Optimizing Federated Learning on Device Heterogeneity with A Sampling Strategy", was accepted by IEEE IWQoS 2021!

- [2020/09] Our paper, "MobiPose: Real-Time Multi-Person Pose Estimation on Mobile Devices", was accepted by ACM SenSys'20!

Research

My research interests lie in mobile computing and edge computing, including AR/VR, intelligent edge/mobile systems, mobile sensing, and applications of machine learning.

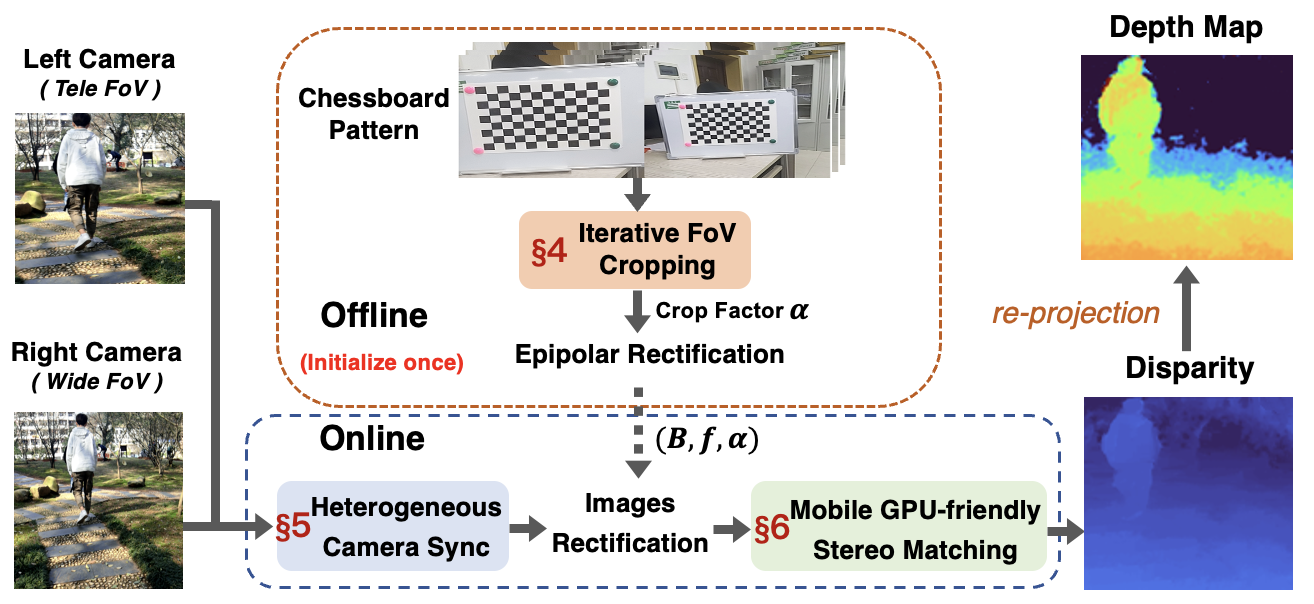

The system overview and workflow of MobiDepth.

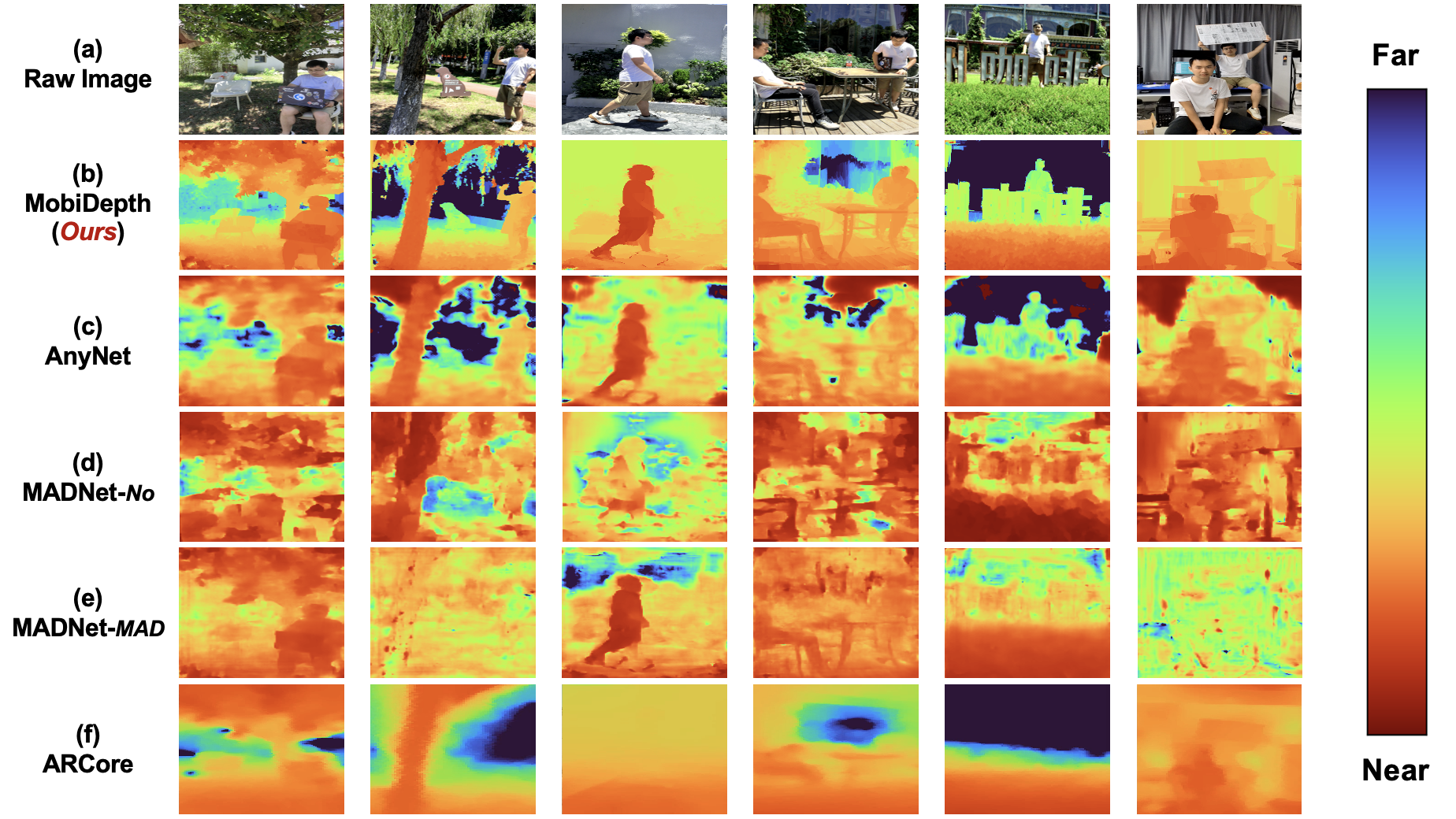

Example depth maps generated by MobiDepth, AnyNet, MADNet with and without online adaptation (named MADNet-MAD and MADNet-No, respectively), and ARCore, with the person sitting, walking and standing.

The initial idea came up around 2021 while dining with some friends in Beijing. While binocular depth estimation is a mature technique, it is challenging to realize on commodity mobile devices because of the different focal lengths and unsynchronized frame flows of the on-device dual cameras, and because of the heavy stereo-matching algorithm. Although ARCore and ARKit are the most common solutions adopted by existing mobile systems, they require the camera to be moving and expect the target object to be stationary, which significantly restricts their usage scenarios. To this end, we propose MobiDepth, a real-time depth estimation system using the widely available on-device dual cameras. MobiDepth resolves the issues of the existing solutions, i.e., it does not rely on any dedicated sensors or pre-training, and it works well for target objects in motion.
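For readers unfamiliar with binocular depth estimation, the textbook relation it builds on can be sketched in a few lines. This is only the standard pinhole-stereo formula for an already rectified image pair, not MobiDepth's pipeline; the function name and the example numbers are made up for illustration.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in meters of a point seen by a rectified stereo pair.

    disparity_px: horizontal pixel shift of the point between the two views
    focal_px:     shared focal length in pixels (assumes the views were
                  already rescaled to a common focal length)
    baseline_m:   distance between the two camera centers in meters
    Returns None when the disparity is not positive (no valid match).
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


# Illustrative values only: 1000 px focal length, 1 cm baseline.
print(depth_from_disparity(20.0, 1000.0, 0.01))
```

The formula assumes a shared focal length and matched frames, which is why the differing focal lengths and unsynchronized frame flows mentioned above have to be handled before stereo matching.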

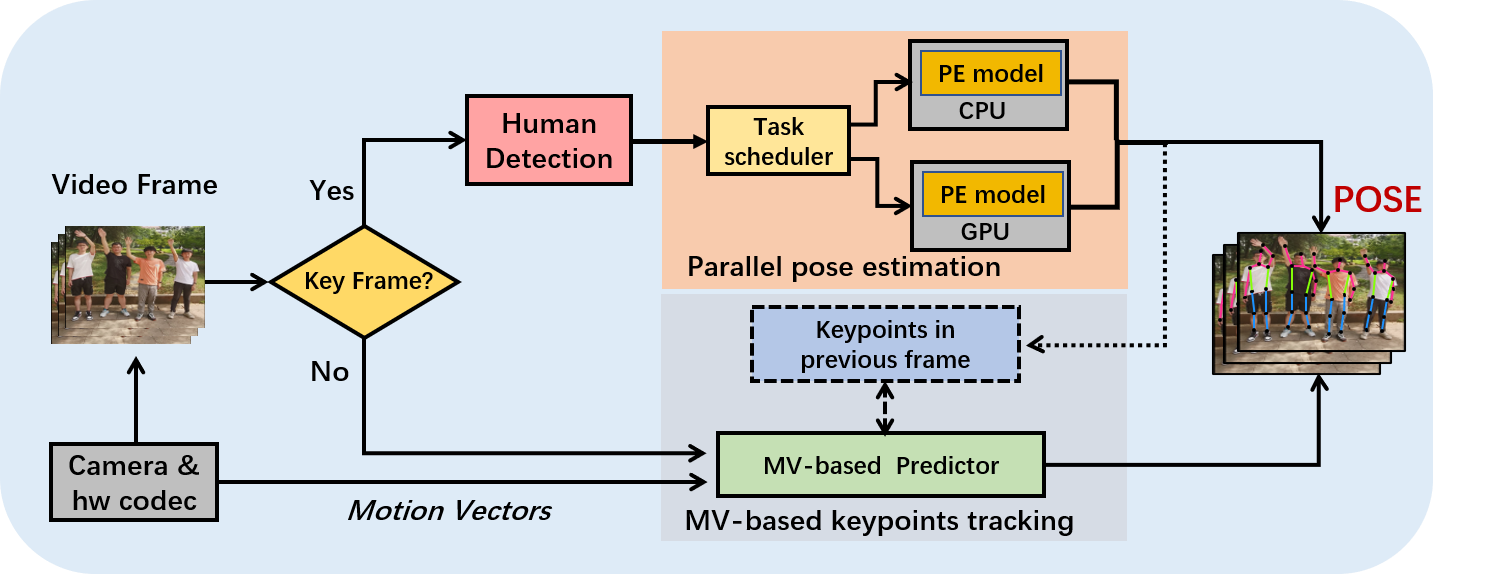

The system architecture and workflow of MVPose.

Example results of MVPose from live videos.

The initial version, MobiPose, was accepted by ACM SenSys 2020. It achieved pose estimation at over 20 frames per second with 3 persons per frame and significantly outperformed the state-of-the-art baseline (MobileNetV3-SSDLite+PoseNet), with a speedup of up to 4.5× and 2.8× in latency on CPU and GPU, respectively, and an improvement of 5.1% in pose-estimation model accuracy.

The second version, MVPose, takes a motion-vector-based approach to track human keypoints quickly and accurately across consecutive frames, rather than running an expensive human-detection model and pose-estimation model on every frame. It achieves pose estimation at over 30 frames per second with 4 persons per frame.
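The idea of reusing motion vectors to carry keypoints forward can be illustrated with a minimal sketch. This is a simplified illustration, not the MVPose implementation. It assumes a hypothetical `mv_grid` in which each 16x16 pixel block stores the `(dx, dy)` displacement of its content from the previous frame to the current one; the block size and the data layout are assumptions.

```python
BLOCK = 16  # assumed block size in pixels


def propagate_keypoints(keypoints, mv_grid, block=BLOCK):
    """Shift each (x, y) keypoint by the motion vector of its enclosing block.

    keypoints: list of (x, y) positions from the previous frame
    mv_grid:   2D list indexed [row][col] of (dx, dy) displacements,
               one entry per block (hypothetical layout)
    """
    rows, cols = len(mv_grid), len(mv_grid[0])
    moved = []
    for x, y in keypoints:
        # Clamp the block index so keypoints near the border stay in range.
        r = min(max(int(y) // block, 0), rows - 1)
        c = min(max(int(x) // block, 0), cols - 1)
        dx, dy = mv_grid[r][c]
        moved.append((x + dx, y + dy))
    return moved


# A 2x2 block grid where only the top-right block moves.
grid = [[(0, 0), (2, -1)],
        [(0, 0), (0, 0)]]
print(propagate_keypoints([(20.0, 5.0), (3.0, 30.0)], grid))
```

A lookup like this costs a few arithmetic operations per keypoint, which gives a sense of why tracking can be much cheaper than running detection and pose models on every frame.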

Publications

Conference Papers:

- Ego3DPose: Capturing 3D Cues from Binocular Egocentric Views

Author : T. Kang, K. Lee, J. Zhang, Y. Lee.

ACM SIGGRAPH Asia 2023 (CCF-A/Top-tier academic conference)

paper | code | video | slides | cite

- MobiDepth: Real-Time Depth Estimation Using On-Device Dual Cameras

Author : J. Zhang, H. Yang, J. Ren, D. Zhang, B. He, T. Cao, Y. Li, Y. Zhang and Y. Liu.

ACM MobiCom 2022 (CCF-A/Top-tier academic conference)

paper | code | video | slides | cite

- Optimizing Federated Learning on Device Heterogeneity with A Sampling Strategy

Author : X. Xu, S. Duan, J. Zhang, Y. Luo, D. Zhang

IEEE IWQoS 2021 (CCF-B)

paper | video | slides | cite

- MobiPose: Real-Time Multi-Person Pose Estimation on Mobile Devices

Author : J. Zhang, D. Zhang, X. Xu, F. Jia, Y. Liu, X. Liu, J. Ren and Y. Zhang.

ACM SenSys 2020 (CCF-B/Top-tier academic conference)

paper | video | slides | cite

Journal Papers:

- HiMoDepth: Efficient Training-free High-resolution On-device Depth Perception

Author : J. Zhang, H. Yang, J. Ren, D. Zhang, B. He, Y. Lee, T. Cao, Y. Li, Y. Zhang and Y. Liu.

IEEE Transactions on Mobile Computing, to appear. (CCF-A/Top-tier academic journal)

paper | code | slides | cite

- MVPose: Realtime Multi-Person Pose Estimation using Motion Vector on Mobile Devices

Author : J. Zhang, D. Zhang, H. Yang, Y. Liu, J. Ren, X. Xu, F. Jia, and Y. Zhang.

IEEE Transactions on Mobile Computing, to appear. (CCF-A/Top-tier academic journal)

paper | code | slides | cite

Patents

- A Method and Apparatus for Multi-Person Human Skeleton Recognition on Mobile Devices (一种移动端的多人人体骨架识别方法及装置). ID: 202010952810.5

Services

- Journal Reviewer: IEEE Transactions on Mobile Computing (TMC)

Experiences

- Tsinghua University, AIR (2021.08-2022.05), Research Intern. Mentor: Yunxin Liu

- Microsoft Research Asia (2020.09-2021.03), Research Intern. Mentor: Yunxin Liu

- Peking University (2020.03-2020.07), Visiting Student. Mentor: Xuanzhe Liu

Awards

- The 10th National Smart Car Contest: National Grand Prize

- The 10th Smart Car Contest in South China: Champion of South China

- Mathematical Contest in Modeling (MCM): Meritorious Winner

- The 7th Hunan Provincial Smart Car Contest: First Prize

- The 2nd Hunan Provincial Postgraduate AI Innovation Contest: First Prize

Honors

- Encouragement Scholarship of Central South University (2021)

- China Scholarship Council (CSC) Scholarship (2021)

- Outstanding Student Cadre of Central South University (2020)

- Excellent Student Cadre of Central South University (2018/2019)

- Excellent Student of Central South University (2017/2021)

- Outstanding Graduate of Hunan Province (2016)